%%2023-12-17%%

Project: Capstone II

You are hired as a DevOps Engineer for Analytics Pvt Ltd. This company is a product-based organization that uses Docker for its containerization needs. The final product received a lot of traction in the first few weeks after launch. With the increasing demand, the organization now needs a platform for automating the deployment, scaling, and operation of application containers across clusters of hosts. As a DevOps Engineer, you need to implement a DevOps lifecycle such that all the requirements are implemented without any change in the Docker containers in the testing environment.

Until now, this organization has followed a monolithic architecture with just 2 developers. The product is available at: website.git

Following are the specifications of the lifecycle:

- Git workflow should be implemented. Since the company follows a monolithic architecture of development, you need to take care of version control. The release should happen only on the 25th of every month.

- CodeBuild should be triggered whenever commits are made to the master branch.

- The code should be containerized with the help of a Dockerfile. The Dockerfile should be built every time there is a push to GitHub. Create a custom Docker image using a Dockerfile.

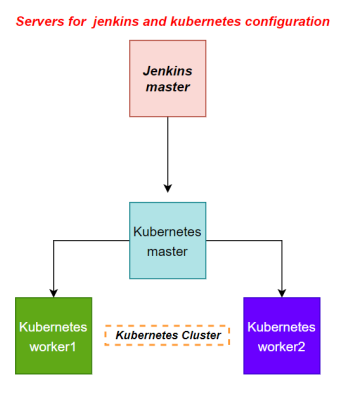

- As per the requirements of the production server, you need to use a Kubernetes cluster, and the containerized code from Docker Hub should be deployed with 2 replicas. Create a NodePort service and configure it for port 30008.

- Create a Jenkins Pipeline script to accomplish the above task.

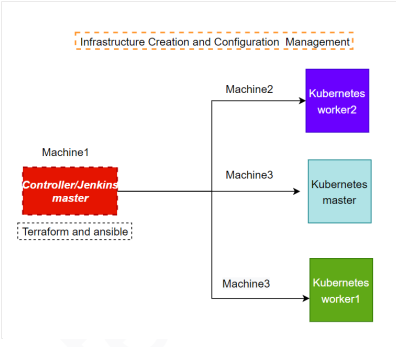

- For configuration management of the infrastructure, you need to deploy the configuration to the servers to install the necessary software.

- Using Terraform, accomplish the task of infrastructure creation in the AWS cloud provider.

Architectural Advice: Software to be installed on the respective machines using configuration management:

- Worker1: Jenkins, Java
- Worker2: Docker, Kubernetes
- Worker3: Java, Docker, Kubernetes
- Worker4: Docker, Kubernetes
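The first requirement limits releases to the 25th of each month, but nothing in the lifecycle enforces it. Below is a minimal sketch of one way to gate it. It assumes that day-to-day work is committed to a separate `develop` branch and that a release means merging into `master`, the branch whose commits should trigger the build. The branch name `develop` and the helper names `is_release_day` and `release` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical release gate for the "release only on the 25th" rule.
# Assumes work lands on a branch named "develop" (illustrative name) and
# that a release is a merge into "master".

# Succeeds only when the given day of month (default: today) is the 25th.
is_release_day() {
  local day="${1:-$(date +%d)}"
  # Force base 10 so days with a leading zero, such as "08", parse correctly
  [ "$((10#$day))" -eq 25 ]
}

# Merges develop into master and pushes it; defined here, not run.
release() {
  git checkout master &&
    git pull --ff-only origin master &&
    git merge --no-ff develop -m "Release $(date +%Y-%m-%d)" &&
    git push origin master
}
```

A scheduled job could then run `is_release_day && release`. On any day other than the 25th, the gate returns a non-zero status and nothing is merged or pushed.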

%%

I installed Jenkins on the Jenkins_Terraform_Ansible EC2 instance using the script Jenkins_terraform_ansible.sh (which I have since updated). However, Jenkins kept freezing. To resolve this, I set up a new EC2 instance with Ubuntu 22 and installed the newest version of Jenkins following the official documentation.

Keep in mind that the Java versions on the Jenkins host and agent need to be the same.%%

Terraform

I create an EC2 instance named Jenkins_Terraform_Ansible.

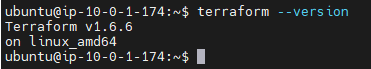

Install Terraform:

```shell
wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list
sudo apt update && sudo apt install terraform -y
```

For Terraform to authenticate with AWS, I create a file named ~/.aws/credentials that contains my AWS access key and secret key. The AWS provider automatically picks up the credentials from this shared credentials file.

```ini
[default]
aws_access_key_id = your_access_key
aws_secret_access_key = your_secret_key
```

I’ll provision three “Ubuntu 20.04 LTS” EC2 instances with the following main.tf:

provider "aws" {

region = "us-east-1"

}

resource "aws_instance" "K8-M" {

ami = "ami-06aa3f7caf3a30282" #Ubuntu 20.04 LTS

instance_type = "t2.medium"

key_name = "daro.io"

subnet_id = "subnet-05419866677eb6366"

tags = {

Name = "Kmaster"

}

}

resource "aws_instance" "K8-S1" {

ami = "ami-06aa3f7caf3a30282"

instance_type = "t2.micro"

key_name = "daro.io"

subnet_id = "subnet-05419866677eb6366"

tags = {

Name = "Kslave1"

}

}

resource "aws_instance" "K8-S2" {

ami = "ami-06aa3f7caf3a30282"

instance_type = "t2.micro"

key_name = "daro.io"

subnet_id = "subnet-05419866677eb6366"

tags = {

Name = "Kslave2"

}

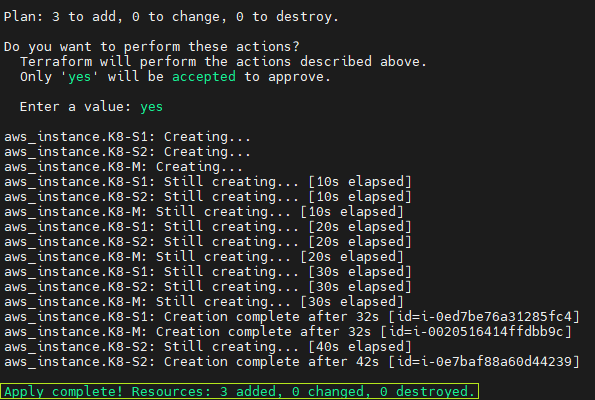

}I use the following commands to initiate the AWS plugin, view the plan, and apply it in Terraform:

```shell
terraform init
terraform plan
terraform apply
```

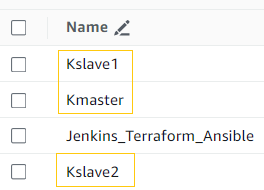

My three new instances are “Kmaster”, “Kslave1”, and “Kslave2”.
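The Ansible inventory uses the instances' private IPs, which otherwise have to be copied from the AWS console. Below is a minimal sketch that adds an outputs.tf so Terraform prints those IPs. It assumes the resource names from main.tf above, and the output names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write an outputs.tf next to main.tf so Terraform reports the
# private IPs needed by the Ansible inventory. Output names are hypothetical.
cat > outputs.tf <<'EOF'
output "kmaster_private_ip" {
  value = aws_instance.K8-M.private_ip
}

output "kslave_private_ips" {
  value = [aws_instance.K8-S1.private_ip, aws_instance.K8-S2.private_ip]
}
EOF
```

After the next `terraform apply`, `terraform output` lists both values.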

Ansible

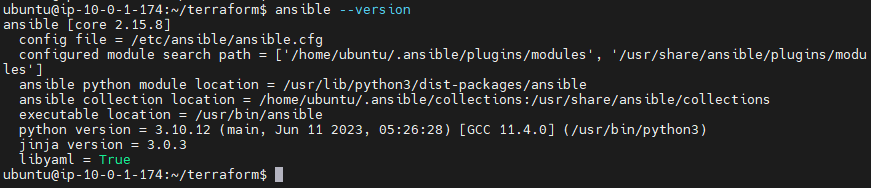

Install Ansible on the Jenkins_Terraform_Ansible instance:

```shell
sudo apt update
sudo apt install software-properties-common
sudo add-apt-repository --yes --update ppa:ansible/ansible
sudo apt install ansible -y
```

Creating an Inventory

I create a file named inventory.ini:

%%Create inventory for ansible%%

```ini
[all:vars]
ansible_user=ubuntu

[kmaster]
Kmaster ansible_host=10.0.1.52

[kslave]
Kslave1 ansible_host=10.0.1.67
Kslave2 ansible_host=10.0.1.119
```

^8173dd

SSH setup

%%reference: setup ssh%%

To enable Ansible to connect to the nodes in my inventory, I recreated the key daro.io.pem on the server and ran ssh-agent. This allows me to connect without having to specify the key for the remainder of the session. The commands I use are:

eval $(ssh-agent -s)

sudo chmod 600 daro.io.pem

ssh-add daro.io.pem

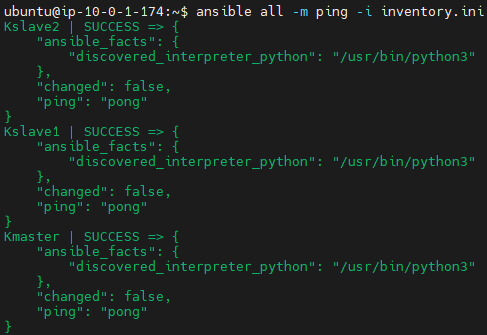

ssh-add -lI test connection

ansible all -m ping -i inventory.ini
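The agent only lasts as long as the shell session. As an alternative, Ansible's `ansible_ssh_private_key_file` variable can point every host at the key permanently. The sketch below assumes the key lives at /home/ubuntu/daro.io.pem. It writes a separate file, inventory-key.ini (a hypothetical name), so the original inventory stays untouched.

```shell
#!/usr/bin/env bash
# Sketch: same hosts as inventory.ini, plus a key setting so ssh-agent is
# not needed. The key path and the file name are assumptions.
cat > inventory-key.ini <<'EOF'
[all:vars]
ansible_user=ubuntu
ansible_ssh_private_key_file=/home/ubuntu/daro.io.pem

[kmaster]
Kmaster ansible_host=10.0.1.52

[kslave]
Kslave1 ansible_host=10.0.1.67
Kslave2 ansible_host=10.0.1.119
EOF
```

With this file, `ansible all -m ping -i inventory-key.ini` should work in a fresh shell without running `ssh-add` first.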

Playbook and scripts

```mermaid
graph TD
    playbook.yaml -- on localhost --> Jenkins_terraform_ansible.sh
    playbook.yaml -- on Kmaster --> Kmaster.sh
    class playbook.yaml,Jenkins_terraform_ansible.sh,Kmaster.sh internal-link;
```

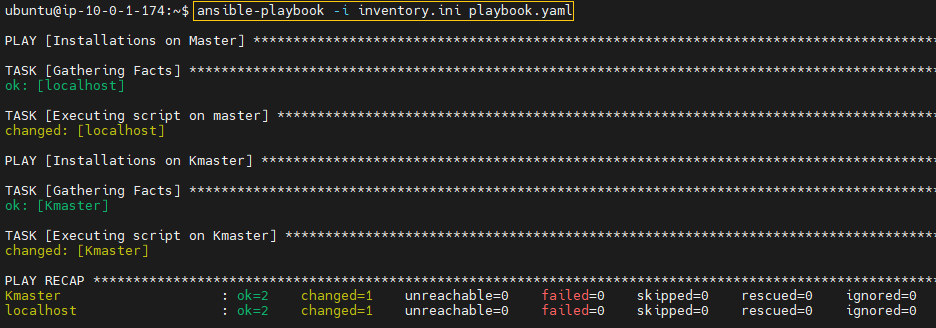
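playbook.yaml itself is not reproduced in this write-up. Going only by the diagram, here is a minimal sketch of what it could look like: one play runs Jenkins_terraform_ansible.sh on the control node and one runs Kmaster.sh on the kmaster group, both through Ansible's `script` module, which copies a local script to the target and executes it. The play names, `become: true`, and the output file name playbook-sketch.yaml are assumptions, and the real playbook may differ.

```shell
#!/usr/bin/env bash
# Sketch only: a playbook reconstructed from the diagram. Both scripts are
# expected in the same directory as the playbook.
cat > playbook-sketch.yaml <<'EOF'
- name: Configure the control node
  hosts: localhost
  connection: local
  become: true
  tasks:
    - name: Run Jenkins_terraform_ansible.sh locally
      ansible.builtin.script: Jenkins_terraform_ansible.sh

- name: Configure the Kubernetes master
  hosts: kmaster
  become: true
  tasks:
    - name: Run Kmaster.sh on Kmaster
      ansible.builtin.script: Kmaster.sh
EOF
```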

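I first run the playbook in check mode (a dry run that reports changes without making them), then run it for real:

```shell
ansible-playbook -i inventory.ini playbook.yaml --check
ansible-playbook -i inventory.ini playbook.yaml
```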

Repo

I forked website.git.

I cloned it to my machine, where I created three new files to push to the repo:

```shell
git clone https://github.com/hectorproko/website.git
```

Dockerfile

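```dockerfile
FROM ubuntu
# Avoid interactive prompts while apt installs packages during the build
ARG DEBIAN_FRONTEND=noninteractive
# Update the package index and install Apache in the same layer
RUN apt-get update && apt-get install -y apache2
# Copy the website files into Apache's document root
COPY . /var/www/html
# Keep Apache in the foreground so the container stays running
ENTRYPOINT ["apachectl", "-D", "FOREGROUND"]
```

This Dockerfile creates a Docker image based on Ubuntu that installs the Apache web server and runs it in the foreground, serving the website's files from the /var/www/html directory inside the container.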
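The Dockerfile copies the whole build context into /var/www/html. As a result, the .git directory, the Dockerfile, and the Kubernetes manifests also end up in Apache's document root and can be downloaded from the site. A small .dockerignore keeps them out of the image. The entries below are a suggested starting point, not the project's actual file.

```shell
#!/usr/bin/env bash
# Sketch: keep repository and deployment files out of the image's web root.
# Run from the repository root, next to the Dockerfile.
cat > .dockerignore <<'EOF'
.git
Dockerfile
.dockerignore
deploy.yml
svc.yml
EOF
```

Docker still sends the Dockerfile and .dockerignore to the builder even when they are listed, so the build keeps working. They are only excluded from `COPY`/`ADD`.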

Incorporate files into the remote repo

I use the following git commands:

```shell
git status
git add .
git commit -m "some title"
git branch
git push
```

Kubernetes

I install kubeadm (as in Assignment 1 – Kubernetes) on the instances that make up my Kubernetes cluster (Kmaster, Kslave1, Kslave2).

I run kubectl get nodes on Kmaster to verify the cluster. Every node reports NotReady, which shows I still need a networking plugin:

```text
ubuntu@ip-10-0-1-174:~$ ssh ubuntu@10.0.1.52 'kubectl get nodes'
NAME            STATUS     ROLES           AGE     VERSION
ip-10-0-1-119   NotReady   <none>          96s     v1.28.2
ip-10-0-1-52    NotReady   control-plane   9m39s   v1.28.2
ip-10-0-1-67    NotReady   <none>          2m7s    v1.28.2
```

I install the networking plugin (Calico):

```shell
ssh ubuntu@10.0.1.52 'kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml'
```

I verify the cluster again, and every node's STATUS is now Ready:

```text
ubuntu@ip-10-0-1-174:~$ ssh ubuntu@10.0.1.52 'kubectl get nodes'
NAME            STATUS   ROLES           AGE     VERSION
ip-10-0-1-119   Ready    <none>          7m39s   v1.28.2
ip-10-0-1-52    Ready    control-plane   15m     v1.28.2
ip-10-0-1-67    Ready    <none>          8m10s   v1.28.2
```

Jenkins

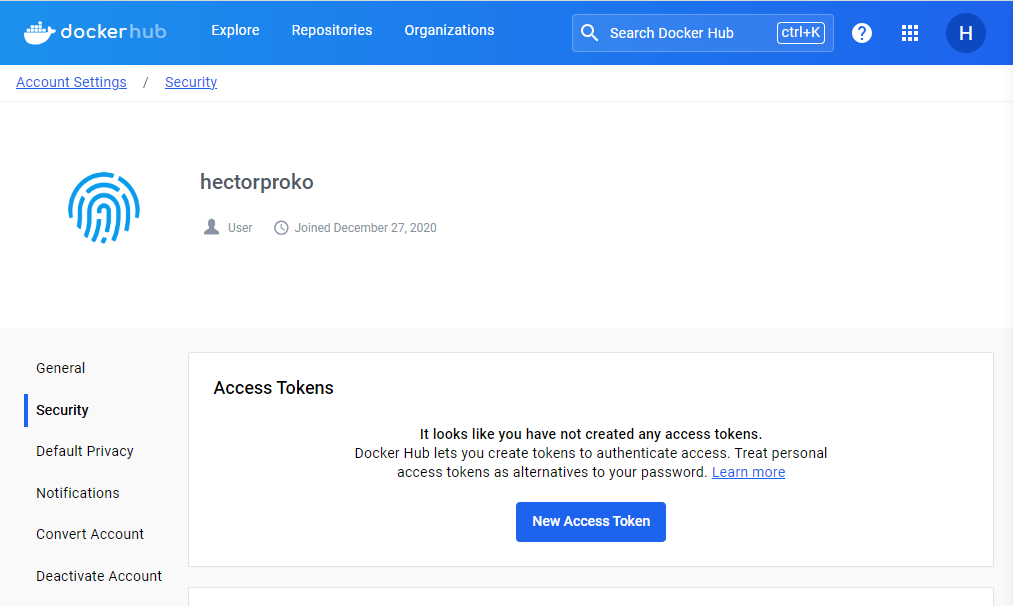

I access Jenkins through the browser to set it up with the default plugins, a user, credentials, and agents, just like in Assignment 1 – Jenkins.

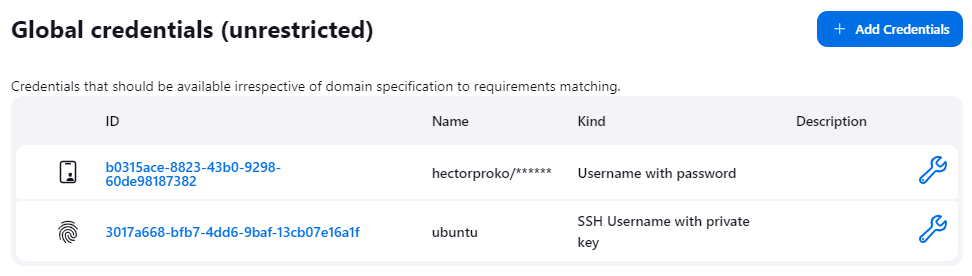

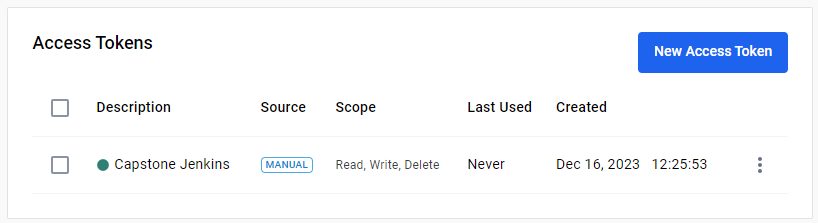

I created “docker” and “ssh” credentials.

For Docker, I use an access token generated on Docker Hub.

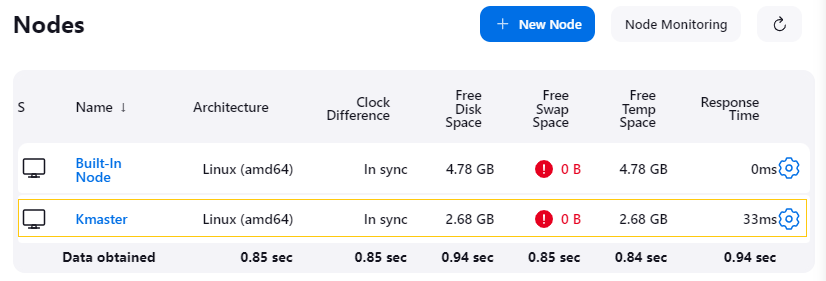

I added the agent “Kmaster”.

%%add agents%%

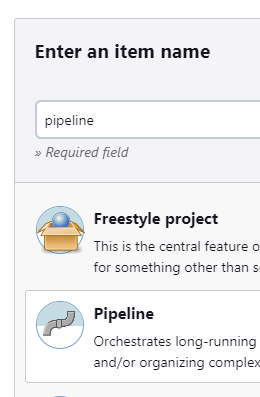

I create a job of type “Pipeline”:

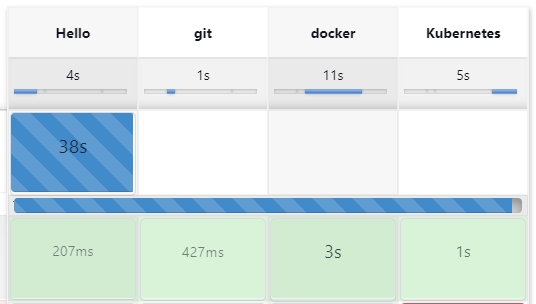

```groovy
pipeline {
    // No pipeline-wide agent: each stage runs on the agent labeled 'KMaster'
    agent none

    environment {
        // Username/password credential; Jenkins also defines
        // DOCKERHUB_CREDENTIALS_USR and DOCKERHUB_CREDENTIALS_PSW from it
        DOCKERHUB_CREDENTIALS = credentials('b0315ace-8823-43b0-9298-60de98187382')
    }

    stages {
        stage('Hello') {
            agent { label 'KMaster' }
            steps {
                echo 'Hello World'
            }
        }

        stage('git') {
            agent { label 'KMaster' }
            steps {
                // Check out the repository into the job workspace
                git 'https://github.com/hectorproko/website.git'
            }
        }

        stage('docker') {
            agent { label 'KMaster' }
            steps {
                echo "test"
                // Build from the workspace checked out in the previous stage
                sh 'sudo docker build . -t docker6767/image'
                // Single quotes: the shell, not Groovy, expands the secret
                sh 'echo $DOCKERHUB_CREDENTIALS_PSW | sudo docker login -u $DOCKERHUB_CREDENTIALS_USR --password-stdin'
                // Tag the image with the Jenkins build number, then push it
                sh "sudo docker tag docker6767/image:latest hectorproko/from_jenkins:${env.BUILD_NUMBER}"
                sh "sudo docker push hectorproko/from_jenkins:${env.BUILD_NUMBER}"
            }
        }

        stage('Kubernetes') {
            agent { label 'KMaster' }
            steps {
                echo "test"
                // apply (rather than create) lets the job re-run without
                // failing on objects that already exist
                sh 'kubectl apply -f deploy.yml'
                sh 'kubectl apply -f svc.yml'
            }
        }
    }
}
```

The script uses an environment variable for the Docker Hub credentials and runs every stage on the ‘KMaster’ agent:

- Hello: Prints “Hello World” to demonstrate a basic step.

- Git: Clones a Git repository from a specified URL.

- Docker: Builds a Docker image from the workspace, logs into Docker Hub using stored credentials, tags the image with the build number, and pushes it to a Docker repository.

- Kubernetes: Deploys the application to Kubernetes using kubectl commands that reference the deployment and service configuration files (deploy.yml and svc.yml).
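deploy.yml and svc.yml are not shown in this write-up. Below is a minimal sketch that generates manifests matching the requirements: two replicas and a NodePort service on 30008. It assumes an `app: website` label, the resource names `website` and `website-svc`, and Apache listening on port 80 inside the container. It also assumes the image tag comes from Jenkins' `BUILD_NUMBER` environment variable when run in an `sh` step, falling back to 1 elsewhere. All of these names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: generate deploy.yml and svc.yml for the website image.
# Names, labels, and the fallback tag are hypothetical; the real files may differ.
set -euo pipefail

# Jenkins exports BUILD_NUMBER to sh steps; fall back to 1 outside Jenkins
IMAGE="hectorproko/from_jenkins:${BUILD_NUMBER:-1}"

# Unquoted EOF so ${IMAGE} is expanded into the manifest
cat > deploy.yml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: website
spec:
  replicas: 2
  selector:
    matchLabels:
      app: website
  template:
    metadata:
      labels:
        app: website
    spec:
      containers:
        - name: website
          image: ${IMAGE}
          ports:
            - containerPort: 80
EOF

# Quoted EOF: the Service has nothing to expand
cat > svc.yml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: website-svc
spec:
  type: NodePort
  selector:
    app: website
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30008
EOF
```

With `kubectl create`, a second run fails because the objects already exist. `kubectl apply -f deploy.yml -f svc.yml` updates them in place, so each build can roll the Deployment forward to its new tag.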

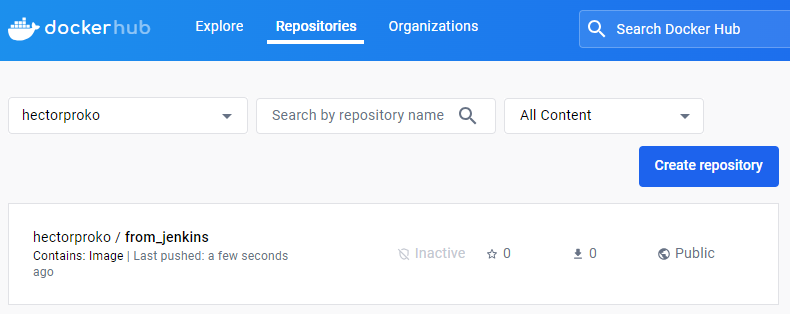

Once the job runs successfully, it creates the repository on Docker Hub.


Opening the repository, we see the different versions of the image. With ${env.BUILD_NUMBER}, I tag each image with the build number before uploading it.

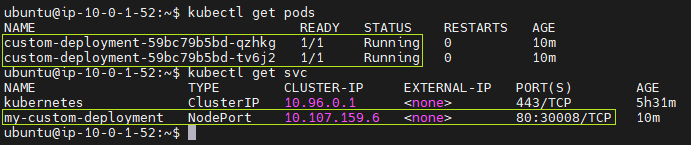

On Kmaster, I see the two pod replicas specified in deploy.yml and the service defined in svc.yml.

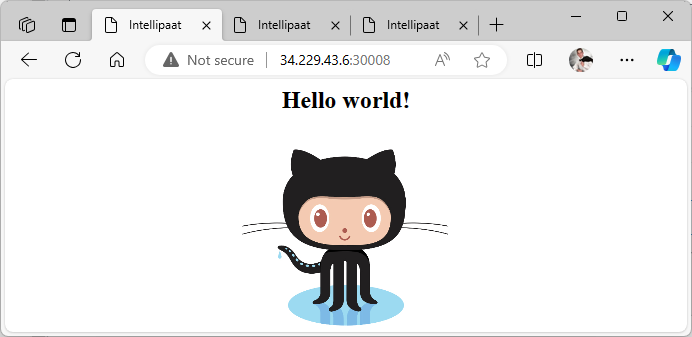

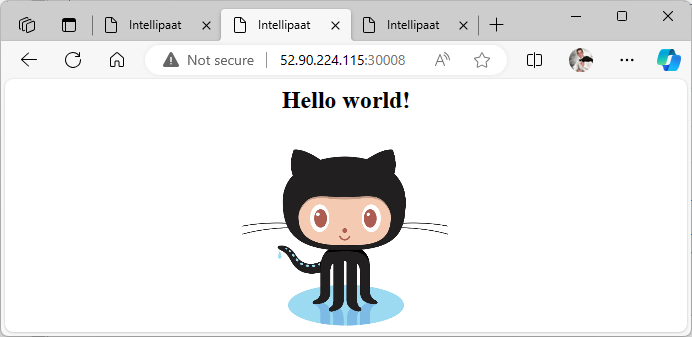

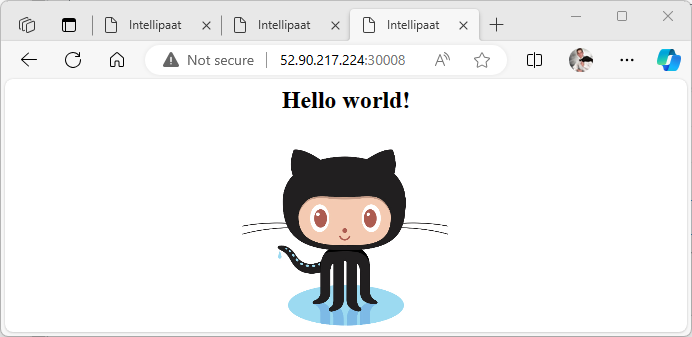

To verify that the service is reachable through the NodePort, I try to reach it using the public IP address of any node in the Kubernetes cluster (Kmaster, Kslave1, or Kslave2), followed by the NodePort number, 30008.

Success
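The same check can be scripted across all three nodes. Below is a minimal sketch. It assumes curl is installed and the instances' security group allows inbound TCP 30008. The function names `nodeport_url` and `probe_nodes` are hypothetical, and the node IPs must be supplied by hand.

```shell
#!/usr/bin/env bash
# Sketch: request the NodePort on each node's public IP and report the HTTP status.
# Assumes curl is installed and the security group allows inbound TCP 30008.
NODE_PORT=30008

# Build the URL for one node
nodeport_url() {
  printf 'http://%s:%s/\n' "$1" "$NODE_PORT"
}

# Probe every IP passed as an argument
probe_nodes() {
  local ip code
  for ip in "$@"; do
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code,
    # --max-time: give up after 5 seconds instead of hanging
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$(nodeport_url "$ip")" || true)
    echo "$ip -> ${code:-no response}"
  done
}
```

For example, `probe_nodes <Kmaster-IP> <Kslave1-IP> <Kslave2-IP>` prints one line per node. A status of 200 means Apache answered through the NodePort. A status of 000 means no HTTP response arrived, which is what curl reports when the connection fails or times out.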